Us vs. Them: Understanding Artificial Intelligence Technophobia over the Google DeepMind Challenge Match

Advances in machine learning, the explosive growth of accumulated data, and increases in computing power have yielded artificial intelligence (AI) technology comparable to human capabilities in various fields. This technology allows ordinary users to interact with intelligent devices and services, such as Apple’s Siri and Amazon’s Echo. AI technology is expected to become increasingly prevalent in areas such as autonomous vehicles, medical treatment, game playing, and customized advertising.

However, some people fear AI. Many scientists, including Stephen Hawking and Ray Kurzweil, have expressed concerns about the problems that could arise in the age of AI. According to an online survey conducted by the British Science Association (BSA), about 60% of respondents believe that the use of AI will lead to fewer job prospects within 10 years, and 36% believe that the development of AI poses a threat to the long-term survival of humanity. Such fears could lead to an outright rejection of the technology, with negative effects on individuals and society. Understanding users’ views on AI is therefore an important task for the field of human-computer interaction (HCI).

GOOGLE DEEPMIND CHALLENGE MATCH

The Google DeepMind Challenge Match was a Go match between Lee Sedol, a former world Go champion, and AlphaGo, an AI Go program. It took place in Seoul, South Korea, from March 9 to 15, 2016. Because Go has long been regarded as the most challenging classic game for AI, the match attracted considerable attention from the AI and Go communities worldwide. The match consisted of five games, and AlphaGo won it by taking four of the five. The two players are described below:

- AlphaGo is a computer Go program developed by Google DeepMind. Its algorithm combines tree search with machine learning, drawing on extensive training from human expert games and computer self-play games. Specifically, it uses a state-of-the-art Monte Carlo tree search (MCTS) guided by two deep neural networks: a “policy network” that selects moves and a “value network” that evaluates board positions. At the time of the match it was regarded as the strongest Go program ever built, and the Korea Baduk Association awarded it an honorary 9-dan ranking, the association’s highest.

- Lee Sedol is a South Korean professional Go player of 9-dan rank. He was an 18-time world Go champion, and he won 32 games in a row in the 2000s. Although he no longer holds the title of champion, he is still widely acknowledged as one of the best Go players in the world. Unlike the traditional style of slow, careful deliberation, his style is to read a vast number of moves and complicate the overall situation, eventually confusing and overwhelming his opponent. This style created a sensation in the Go community, and many Koreans consider him a Go genius.
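The way a policy network and a value network can steer the tree search described for AlphaGo above can be sketched in a few lines. The Python below is a minimal illustration, not DeepMind’s implementation: it applies a PUCT-style selection rule (priors from a policy network bias which moves get explored, and a value network scores leaf positions) to a hypothetical toy game standing in for Go. The functions `policy_net` and `value_net` are hand-written stand-ins, the constant `c_puct` is an assumed setting, and AlphaGo’s fast rollouts and other refinements are omitted.

```python
import math
from dataclasses import dataclass, field

# Hypothetical toy game standing in for Go: a pile of stones, each move
# removes 1 or 2 stones, and the player who takes the last stone wins.
MOVES = (1, 2)


def legal_moves(pile: int) -> list[int]:
    return [m for m in MOVES if m <= pile]


def policy_net(pile: int) -> dict[int, float]:
    """Stand-in for a policy network: a uniform prior over legal moves."""
    moves = legal_moves(pile)
    return {m: 1.0 / len(moves) for m in moves}


def value_net(pile: int) -> float:
    """Stand-in for a value network: value in [-1, 1] for the player to move.
    In this toy game the exact value is known: piles divisible by 3 are lost."""
    return -1.0 if pile % 3 == 0 else 1.0


@dataclass
class Node:
    pile: int
    prior: float
    visits: int = 0
    value_sum: float = 0.0  # from the view of the player who moved INTO this node
    children: dict = field(default_factory=dict)

    def q(self) -> float:
        return self.value_sum / self.visits if self.visits else 0.0


def select_child(node: Node, c_puct: float) -> tuple[int, Node]:
    """PUCT rule: maximize Q(s,a) + c_puct * P(s,a) * sqrt(N(s)) / (1 + N(s,a))."""
    total = sum(child.visits for child in node.children.values())

    def score(item: tuple[int, Node]) -> float:
        _, child = item
        u = c_puct * child.prior * math.sqrt(total) / (1 + child.visits)
        return child.q() + u

    return max(node.children.items(), key=score)


def expand(node: Node) -> None:
    for move, p in policy_net(node.pile).items():
        node.children[move] = Node(pile=node.pile - move, prior=p)


def simulate(root: Node, c_puct: float) -> None:
    # 1. Selection: descend the tree using the PUCT rule.
    path = [root]
    node = root
    while node.children:
        _, node = select_child(node, c_puct)
        path.append(node)
    # 2. Expansion and evaluation of the leaf.
    if node.pile == 0:
        value = -1.0  # no stones left: the player to move has already lost
    else:
        expand(node)
        value = value_net(node.pile)
    # 3. Backup: flip the sign at each level, since players alternate.
    for n in reversed(path):
        value = -value
        n.visits += 1
        n.value_sum += value


def search(pile: int, n_simulations: int = 200, c_puct: float = 1.5) -> int:
    """Run the search from `pile` and return the most-visited root move."""
    root = Node(pile=pile, prior=1.0)
    for _ in range(n_simulations):
        simulate(root, c_puct)
    return max(root.children.items(), key=lambda item: item[1].visits)[0]


print(search(4))  # taking 1 stone leaves 3, a lost pile for the opponent
```

Because the stand-in value function here is exact, the search quickly concentrates its visits on the move that leaves the opponent a losing pile; in real Go both networks are learned approximations, which is why the search and the learned evaluation are combined.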

The result of the match came as a great surprise to many people, as it showed that AI had advanced to a remarkable level, outperforming humans in an area requiring advanced intelligence. After the first game, Demis Hassabis, DeepMind’s co-founder and CEO, tweeted: “#AlphaGo WINS!!!! We landed it on the moon.” The tweet signaled that he regarded the win as a landmark moment in the history of AI research and development.

In Korea, where the match was held, however, Lee’s defeat caused tremendous shock. This was partly due to the cultural significance and popularity of Go in Korea. Go is considered one of the most intellectual games in East Asian culture, and it is extremely popular in Korea; many people followed the match closely before and after the event. In addition, expectations of a Lee victory were high. Because most people expected Lee to win, many could not accept that AlphaGo, an AI Go program, had defeated Lee, humanity’s representative. On every match day, all national broadcasting stations in Korea reported the news as their top story. Throughout the country, both online and offline, people talked about the event, expressing fear of AlphaGo and of AI in general. We believed that this public discussion offered a unique opportunity to assess and understand people’s fear of AI technology, so we investigated the underlying implications of the event by designing and conducting a user study.

METHODOLOGY

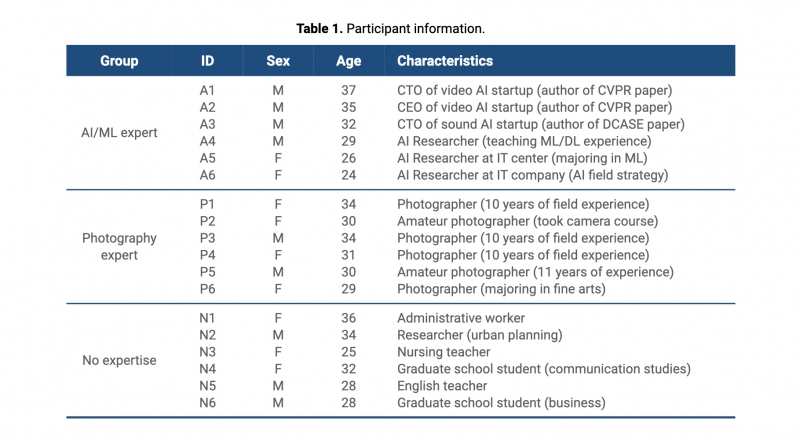

To obtain insights about people’s fear of AI, we designed and conducted semi-structured interviews with 22 participants from diverse backgrounds.

Participant Recruitment

The interviews were designed to identify participants’ fears surrounding the match and to gather diverse opinions about AI. The inclusion criteria were basic knowledge of the match and having watched it through the media at least once. Beyond this requirement, we sought a demographically representative set of participants. Target ages were divided into four categories (20s and under, 30s, 40s, and 50s and over), and we also considered participants’ occupations. We recruited participants living in the Seoul Metropolitan Area by distributing recruitment posters at local stores, schools, and community centers. We first recruited 15 participants who saw the posters and contacted us directly. We then recruited seven additional participants through snowball sampling and the researchers’ contacts to obtain an even spread of participants in terms of age, gender, and occupation. In total, 22 participants were recruited, as shown in Table 1. Each participant received a $10 gift card for taking part.

Interview Process

Each participant took part in one semi-structured interview after the entire match was over. Because we aimed to collect a range of ideas about AI without prompting participants, we intentionally left the term AI undefined before and during the interview. With this in mind, we organized the interview questions around four main issues: participants’ preexisting thoughts and impressions of AI, changes in their thoughts as the match progressed, their impressions of AlphaGo, and their concerns about a future society in which AI technology is widely used. Because we sought to trace participants’ thoughts over time, we designed separate questions for each stage of the match schedule and provided detailed information about the match so that participants could situate themselves in its context. To elicit a wider range of deeper reflections, we also provided 40 keyword cards covering various AI-related issues, drawn from the Wikipedia articles on AI and AlphaGo. We presented the full set of cards and asked participants to pick one to three, so that they could express thoughts about issues they might otherwise have missed. Interviews took place at locations of each participant’s choosing, such as cafes near their offices, and each lasted about an hour. Participants responded more actively than we had expected.

Interview Analysis

Interviews were transcribed and analyzed using grounded theory techniques in three stages. In the first stage, all members of the research team reviewed the transcripts together, shared their ideas, and discussed the main issues observed in the interviews; we repeated this stage three times to develop our views on the data. In the second stage, we conducted keyword tagging and theme building using Reframer, a qualitative research tool from Optimal Workshop. We segmented the transcripts by sentence, entered the data into the software, and, while reviewing the data, annotated each sentence with multiple keywords that together summarized its content. This produced a total of 1,016 keywords, after which we reviewed the labels and text again. By combining related tags, we then built 30 themes from the data. In the third stage, we refined, linked, and integrated those themes into five main categories, described below.
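The bookkeeping side of the second and third stages, in which sentence-level keyword tags are rolled up into themes and themes into categories, can be illustrated with a small Python sketch. The sentences are quotations reported later in this paper, and the two category names are the paper’s own, but the keyword tags, theme names, and both mappings are hypothetical, invented for illustration rather than taken from the study’s codebook. The sketch also assumes nothing about Reframer’s export format. Deciding which tags belong together is interpretive work done by the researchers; the code only shows how the resulting hierarchy can be tallied.

```python
from collections import defaultdict

# Hypothetical coded data: (participant, sentence, keyword tags).
# Sentences are quotations from this paper; the tags are illustrative only.
tagged_sentences = [
    ("P13", "Man should govern artificial intelligence.",
     {"control", "human superiority"}),
    ("P17", "They can take on tasks that require heavy lifting, and they can tackle complex problems.",
     {"labor", "usefulness"}),
    ("P08", "Lawyers could be replaced soon.",
     {"job loss", "professions"}),
    ("P04", "I think that one wealthy person or a few rich people will dominate artificial intelligence.",
     {"monopoly", "inequality"}),
]

# Hypothetical mappings: keyword -> theme, theme -> category.
keyword_to_theme = {
    "control": "Humans should govern AI",
    "human superiority": "Humans should govern AI",
    "labor": "AI as a helper",
    "usefulness": "AI as a helper",
    "job loss": "Replacement of human work",
    "professions": "Replacement of human work",
    "monopoly": "Misuse of AI",
    "inequality": "Misuse of AI",
}
theme_to_category = {
    "Humans should govern AI": "Preconceptions about Artificial Intelligence",
    "AI as a helper": "Preconceptions about Artificial Intelligence",
    "Replacement of human work": "Concerns about the Future of AI",
    "Misuse of AI": "Concerns about the Future of AI",
}


def roll_up(sentences, kw_to_theme, theme_to_cat):
    """Return the set of participants supporting each theme and each category."""
    theme_support = defaultdict(set)
    category_support = defaultdict(set)
    for participant, _text, keywords in sentences:
        for keyword in keywords:
            theme = kw_to_theme[keyword]
            theme_support[theme].add(participant)
            category_support[theme_to_cat[theme]].add(participant)
    return theme_support, category_support


themes, categories = roll_up(tagged_sentences, keyword_to_theme, theme_to_category)
for category, participants in sorted(categories.items()):
    print(f"{category}: {sorted(participants)}")
```

Tracking the set of supporting participants, rather than a raw count of tags, keeps a single talkative participant from inflating a theme.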

The research design protocol was reviewed and approved by the Institutional Review Board of Seoul National University (IRB number: 1607/003-011), and we strictly followed the protocol. All interviews were recorded and transcribed in Korean. The quotes were translated into English, and all participants’ names were replaced by pseudonyms.

FINDINGS

The interviews revealed that participants felt fear related to the match and to AI, and that they framed their relationship with AI as a confrontation of “us vs. them.” People held preconceived stereotypes and ideas about AI derived from mass media, and their thoughts changed as the match progressed. Furthermore, people both anthropomorphized and alienated AlphaGo, and they expressed concerns about a future society in which AI will be widely used.

Preconceptions about Artificial Intelligence

We found that people held preconceptions and fixed ideas about AI: that AI is a potential source of danger, and that AI should be used to help humans.

Artificial Intelligence as a Potential Threat

Throughout the interviews, we found that participants had built their own image of AI, although they had rarely interacted with AI firsthand. When asked about their thoughts and impressions of the term AI, most participants described watching science fiction movies. They mentioned specific examples such as Skynet from The Terminator (1984), Ultron from Avengers: Age of Ultron (2015), HAL 9000 from 2001: A Space Odyssey (1968), the sentient machines of The Matrix (1999), and the robotic boy from A.I. Artificial Intelligence (2001). P15 also described a character from a Japanese animation he had watched in his youth. These characters varied widely, from humanlike robots to disembodied control systems.

Notably, participants formed rather negative images from these media experiences, since most of the AI characters were portrayed as dangerous. Many AI characters in the science fiction movies participants mentioned controlled and threatened human beings, which seemed to reinforce their stereotypes. Some participants agreed that this might have contributed to their belief that AI is a potential source of danger.

Meanwhile, these movie experiences led people to believe that AI technology is not a current issue but one belonging to the distant future. The movies participants mentioned were generally set in the remote future, and their stories relied on futuristic elements such as robots, cyborgs, and interstellar travel, most of which are not available at present. For example, P14 said, “The artificial intelligence in the movie seemed to exist in the distant future, many years from now.”

Artificial Intelligence as a Servant

Many participants had formed their own views about the relationship between humans and AI. They believed that AI could not fulfill every human role, but that it could perform arduous and repetitive tasks quickly and easily. For example, P17 said, “They can take on tasks that require heavy lifting, and they can tackle complex problems.”

The roles participants expected AI to play were tied to their perceptions of its usefulness. Although some participants regarded AI as a potential threat, they partially acknowledged its convenience and abilities. For example, P15 said, “They are convenient; they can help with the things humans have to do. They can do dangerous things and easy and repetitive tasks, things that humans do not want to do.”

At the same time, however, this way of thinking implies a sense of human superiority in the relationship. For example, P13 commented, “Man should govern artificial intelligence. That is exactly my point. I mean, AI cannot surpass humans, and it should not.”

Confrontation: Us vs. Artificial Intelligence

Participants’ thoughts shifted with the result of each game of the match. People expressed fear of AlphaGo at first, but as the match progressed, they began to cheer for Lee Sedol as humanity’s representative. In this process, people came to frame their relationship with AlphaGo as an “us vs. them” confrontation.

Prior to the Match: Lee Can’t Lose

Before the match began, all participants but two expected that Lee Sedol would undoubtedly win. For example, P12 said, “I thought that Lee Sedol would win the game easily. I believed in the power of the humans.” P04 said, “Actually, I thought Lee would beat AlphaGo. I thought even Google wouldn’t be able to defeat him.” This reflected his strong belief in Lee Sedol; the conviction that Lee would win was almost like blind faith. During the interviews, we described AlphaGo’s abilities to participants in detail, explaining its victory over the European Go champion as well as its overwhelming computing power and learning ability. Some participants, however, said they had already known this information but still thought that AI could not win. Several participants gave reasons for their conviction. P15 cited the complexity of Go, explaining that the number of possible permutations in a Go game is larger than the number of atoms in the universe.
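P15’s comparison can be checked with back-of-the-envelope arithmetic. The Python below is a rough sketch that counts board configurations rather than game sequences, which is an assumption about what the comparison refers to; the figure of 10^80 atoms in the observable universe is a widely used order-of-magnitude estimate, not a value from this study. Even this crude upper bound exceeds the atom estimate by a wide margin, and the number of legal positions (about 2.08 × 10^170) does as well.

```python
import math

# Each of the 19 x 19 = 361 intersections is empty, black, or white, so
# 3**361 is a simple upper bound on the number of board configurations.
# (Many of these are illegal; the count of legal positions is about 2.08e170.)
BOARD_POINTS = 19 * 19
upper_bound = 3 ** BOARD_POINTS  # exact integer; Python ints have arbitrary size

# A commonly quoted order-of-magnitude estimate (an assumption, not a
# measurement) for the number of atoms in the observable universe.
ATOMS_ESTIMATE = 10 ** 80

exponent = math.log10(upper_bound)  # math.log10 accepts arbitrarily large ints
print(f"3^{BOARD_POINTS} is about 10^{exponent:.2f}")
print(f"that exceeds the atom estimate by a factor of about 10^{exponent - 80:.0f}")
```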

Game 1: In Disbelief (Lee 0:1 AlphaGo)

Even though many people expected Lee to win, he lost the first game by resignation, which shocked many people in Korea. Although Lee appeared to be in control for much of the game, AlphaGo gained the advantage in the final 20 minutes and Lee resigned. Afterward, he described the computer’s strategy in the early part of the game as “excellent.” Because participants had been convinced of Lee’s victory, their shock was all the greater. P05 said, “I thought, this can’t happen. But it did. What a shock!” P04 also showed his frustration, saying, “AlphaGo won by a wide margin. It was shocking. Lee is the world champion. I couldn’t understand how this could be.”

In addition, some participants said that their attitudes toward the match changed after the first game. Before the game, they just intended to enjoy watching an interesting match; however, when they saw the result, they began to look at the game seriously. P22 commented, “After the first game, I realized it was not a simple match anymore.” Furthermore, some participants began to think that Lee might possibly lose the remaining games. On the other hand, some participants thought that Lee still had a 50-50 chance of winning. P13 said, “I thought mistakes caused Lee’s defeat. People can make mistakes. If he can reduce his mistakes, he can win the remaining games.”

Game 2: We Can’t Win (Lee 0:2 AlphaGo)

Lee suffered another defeat in the second game, and people began to realize AlphaGo’s overwhelming power. During the post-game interview, Lee stated, “AlphaGo played a nearly perfect game from the very beginning. I did not feel like there was a point at which I was leading.” People now started to regard AlphaGo as undefeatable. P04 said, “Lee was defeated in consecutive games. It was shocking. I began to acknowledge AlphaGo’s perfection. Actually I didn’t care that much when Lee was beaten in the first round. But after the second round, I had changed my mind. I realized AlphaGo could not be beaten, and it was terrifying.” P01 commented, “Before the match, I firmly believed Lee would win. But the second game totally changed my mind. It was really shocking.” P02 also noted, “After the second match, I was convinced that humans cannot defeat artificial intelligence.”

Game 3: Not Much Surprise (Lee 0:3 AlphaGo)

By winning the first three games, AlphaGo clinched the best-of-five match. Interestingly, the impact of the third game was not as strong as that of the first or second. Because people had already witnessed AlphaGo’s overwhelming power, most of them anticipated that Lee would lose again, and the result was no different from what they had expected. For example, P14 said, “He lost again… yeah I was sure he would lose.” People became sympathetic and just wanted to see Lee win at least once. P07 said, “I wanted to see just one win. I was on humanity’s side. ‘Yes, I know you (AlphaGo) are the best, but I want to see you lose.’” P01 said, “I didn’t expect that Lee could win the remaining games. But I wished he would win at least once.”

Game 4: An Unbelievable Victory (Lee 1:3 AlphaGo)

Surprisingly, Lee defeated AlphaGo in the fourth game. Although Lee struggled early in the game, he took advantage of AlphaGo’s mistakes to secure a surprising victory. AlphaGo resigned through a pop-up window on its monitor reading “AlphaGo Resigns.” Participants expressed joy over the victory and cheered for Lee, and some saw the result as a “triumph for humanity.” P14 said, “He is a hero. It was terrific. He finally beat artificial intelligence.” P09 said, “I was really touched. I thought it was impossible. AlphaGo is perfect, but Lee was victorious. It was great. He could have given up, but he didn’t, and he finally made it.”

Game 5: Well Done, Lee (Lee 1:4 AlphaGo)

AlphaGo won the fifth and final game. Before the game began, most participants expected AlphaGo to win. At the same time, having seen Lee’s victory the day before, they held out some hope that he would win again. P10 said, “In a way, I thought Lee still had a chance to win the last game. I thought as AlphaGo learned, Lee might learn and build a strategy to fight.” Meanwhile, some participants were already satisfied with the one win and relieved that the match was over. P08 said, “Anyhow, I was contented with the result the day before. The one victory made me relieved. I watched the last game free from all anxiety.” P12 also stated, “Lee played very well. I really respect his courage.”

To sum up, over the five-game match, people were at first deeply shocked and apprehensive, but as the match progressed they gradually began to cheer for Lee Sedol as humanity’s representative. Participants saw the contest between Lee and AlphaGo as the fight of the century: humanity vs. AI. Through this match, people came to understand what AI is and how far the technology has advanced. Participants also identified various characteristics of AI in general as well as of AlphaGo specifically.

Anthropomorphizing AlphaGo

Throughout the interviews, we observed that participants anthropomorphized AlphaGo. They referred to AlphaGo as if it were a human and distinguished AI technology from personal computers. They also described AlphaGo as creative, a trait usually considered unique to human beings.

AlphaGo Is an “Other”

When describing their thoughts and impressions of AlphaGo, people consistently referred to it by name, as if it were a human being. Moreover, they often used verbs and adjectives normally applied to humans when describing AlphaGo’s actions and behaviors, such as “AlphaGo made a mistake,” “AlphaGo is smart,” “AlphaGo learned,” and “AlphaGo practiced,” indicating a tendency toward anthropomorphism. One participant even called AlphaGo “buddy” throughout the interview. When asked whether there was a special reason for this, she said, “I know it is a computer program. But he has a name, ‘AlphaGo.’ This makes AlphaGo like a man, like ‘Mr. AlphaGo.’ I don’t know exactly why, but I think because of his intelligence, I unconsciously acknowledged him as a buddy. ‘You are smart. You deserve to be my buddy.’” P06 went so far as to describe AlphaGo as a superior to whom we should bow, saying, “After the third game, I realized that we were bust. We had lost. AI is the king. We should bow to him.”

AlphaGo Is Different from a Computer

Moreover, we found that participants anthropomorphized not only AlphaGo but AI in general by drawing a sharp distinction between personal computers and AI technology. Participants uniformly described the two as different. While they regarded the computer as a tool for performing certain tasks, they described AI not as a tool but as an agent capable of learning to solve problems and building its own strategies. They thought that a computer could be controlled as a means to an end but that AI could not. They said AI knows more than we do and can therefore take over human work. P07 said, “I think they (computers and AI) are different. I can’t make my computer learn something. I just use it to learn something else. However, artificial intelligence can learn on its own.” P08 also commented, “When I first learned about computers, I thought they were a nice tool for doing things quickly and easily. But artificial intelligence exceeds me. AI can do everything I can do, and I cannot control it. The main difference between computers and AI is our ability to control it.”

AlphaGo Is Creative

Some participants described AlphaGo’s playing style as somewhat creative, because AlphaGo made unorthodox and seemingly questionable moves during the match. These moves initially puzzled spectators, but surprisingly, they made sense in hindsight and proved decisive. In other words, viewers saw AlphaGo as evaluating moves differently from human Go players, and this was what ultimately won it the game. Some participants thought that AlphaGo had shown the Go community an entirely new approach. P09 said, “It was embarrassing. AlphaGo’s moves were unpredictable. It seemed like he had made a mistake. But, by playing in a different way from men, he took the victory. I heard he builds his own strategies by calculating every winning rate. People learn their strategies from their teachers. But AlphaGo discovered new ways a human teacher cannot suggest. I think we should learn from AlphaGo’s moves.” P11, an amateur 7-dan Go player and Go academy teacher, expressed the same view based on his own experience. He had learned Go through the apprentice system; beyond the basic rules, learning for him meant imitating the style of his teacher or of the best Go players. His learning focused not on finding the moves with the highest winning rate but on finding the weak spots of the champion of the day. He said that Go styles have also tended to follow the prevailing trend whenever a new champion appears. AlphaGo’s moves departed entirely from this style and trend, which made them seem creative and original to P11.

Alienating AlphaGo

People also alienated AlphaGo by contrasting it with human characteristics. They sometimes showed hostility toward AlphaGo and reported feeling negative emotions toward it.

AlphaGo Is Invincible

All participants agreed that AlphaGo, “compared to a human being,” has overwhelming ability. AlphaGo was trained to mimic human play by attempting to match the moves of expert players in recorded historical games, using a database of around 30 million moves. Once it had reached a certain level of proficiency, it was trained further by playing large numbers of games against other instances of itself, using reinforcement learning to improve its play. Participants agreed that a human’s limited calculating ability and intuition cannot match AlphaGo’s power. P22 said, “Lee cannot beat AlphaGo. AlphaGo learns ceaselessly every day. He plays himself many times a day, and he saves all his data. How can anyone beat him?” P15 said, “I heard that AlphaGo has data on more than a million moves, and he studies the data with various approaches for himself. He always calculates the odds and suggests the best move, and he can even look further ahead in the game than humans. He knows the end. He knows every possible case.” P15 even argued that the match was unfair. He contended that unlike Lee Sedol, who was playing alone, AlphaGo was linked to more than 1,000 computers, which made its computing power far superior to that of any human. For these reasons, he insisted that Lee was doomed from the start and that the result should be invalid: “It was connected with other computers… like a cloud? Is it the right word? It is like a fight with 1,000 men. Also, computers are faster than humans. It is unfair. I think it was unfair.”

AlphaGo Is Ruthless

Throughout the match, participants referred to AlphaGo’s ruthlessness and heartlessness, which are “uncommon traits in humans.” When professional Go players compete, a subtle tension usually arises between them; reading the opponent’s feelings can be significant, and emotional factors can affect the outcome. AlphaGo, however, expressed no emotion at all throughout the match. P20, who described himself as having a profound knowledge of Go, commented that there was not a touch of humanity in AlphaGo’s style. He said, “AlphaGo has no aesthetic sense, fun, pleasure, joy, or excitement. It was nothing like a human Go player. Most pro Go players would never make moves in that way. They leave a taste on the board. They play the game with their board opened. But AlphaGo tried to cut off the possibility of variation again and again.” Around that time, “AlphaGo-like” became a widely used adjective in Korea, meaning ruthless, inhibited, and emotionally barren. One of our participants, P07, used a variant of the term: “Since the match, I often call my husband ‘Alpha Park’ because there is no sincerity in his words.”

AlphaGo Is Amorphous

“Unlike human Go players,” AlphaGo has no physical form and exists only as a computer program, which left a deep impression on viewers. Because AlphaGo is only an algorithm, its moves were displayed on a monitor beside Lee, and Aja Huang, a DeepMind team member, placed the stones on the board on its behalf. AlphaGo itself ran on Google’s cloud computing infrastructure, with its servers located in the United States. At first, people wondered who AlphaGo was, and some participants assumed that Aja Huang was AlphaGo in human form. P03 said, “My mom said she mistook Aja Huang for AlphaGo. I think people tend to believe that artificial intelligence has a human form, like a robot. If something has intelligence, then it must have a physical form. Also, artificial intelligence is an advanced, intelligent thing like a human, which makes people think its shape must also be like that of a human.” One participant believed that Aja Huang was AlphaGo right up until the interview. She said, “Wasn’t he AlphaGo? I didn’t know that. It’s a little weird, don’t you think?”

AlphaGo Defeats Man

All participants said that AlphaGo induced negative feelings toward AI. As described above, AI had generally been considered something belonging to the distant future, but the AlphaGo event showed that the technology had already arrived. P08 said, “Although Lee won once, he finally lost. This is a symbolic event of artificial intelligence overpowering humans. I’m sure this event made me feel the power of artificial intelligence in my bones.” P07 also said, “Before I watched the match, I had no idea about who was developing artificial intelligence and how much the technology had developed. But now I know a few things about artificial intelligence. AlphaGo taught me how powerful the technology is.”

We also observed that AlphaGo contributed to negative emotional states. Some participants told us that they felt helplessness, displeasure, depression, and a sense of human frailty, and that watching the match caused them stress. They also said the result of the match knocked their confidence and increased their anxiety. Those who did not feel this way themselves said they commonly saw people around them suffering for the same reason. P03 noted, “AlphaGo can easily achieve any goal. But I have to devote my entire life to reaching a goal. No matter how hard I try, I cannot beat AI. I feel bad and stressed. I’ve lost my confidence.” P08 also stated, “The human champion was defeated by AlphaGo. He was completely defeated. Humans cannot catch up with artificial intelligence. I started to lose my confidence and feel hostility toward artificial intelligence. I became lethargic.” P20 said, “If I had a chance to compete with AlphaGo, I think I would give up because it would be a meaningless game.”

Concerns about the Future of AI

After witnessing Lee Sedol’s unexpected defeat, people also raised concerns about a future society in which AI technology is prevalent. In particular, they worried that they would be replaced by AI and that they would be unable to keep pace with, or control, its advancement.

Man Is Replaced

People worried that AI will one day be able to perform their jobs, leaving them without work. They expected that, as AI is developed across many different fields, it will surpass human performance in those areas; because of its comparative advantages, AI will then be preferred, and demand for human labor will decrease. Moreover, they believed that replacement would not be confined to simple and repetitive tasks but could extend to specialized professions such as law and medicine. For example, P08, a lawyer, had recently discussed this issue with his colleagues. He said, “Actually, lawyers have to perform extensive research into relevant facts, precedents, and laws in detail while writing legal papers. We have to memorize these materials as much as we can. But we can’t remember everything. Suppose they created an AI lawyer. He could find many materials easily, quickly, and precisely. Lawyers could be replaced soon.”

The fear of losing jobs raised questions about the meaning of human existence. Some participants said they felt that life was futile. P06 said, “We will lose our jobs. We will lose the meaning of existence. The only thing that we can do is have a shit. I feel as if everything I have done so far has been in vain.” P13 showed extreme hostility toward AI, saying, “If they replace humans, they are the enemy,” a remark reminiscent of the Luddites, the early-19th-century movement against newly developed labor-economizing technologies.

Participants also worried that AI will encroach on so-called creative fields such as the arts, which are regarded as unique to human beings. Some participants mentioned news stories reporting that AI can perform comparably to humans in painting, music composition, and fiction writing, and they thought there would be nothing human beings could do in such situations. P01 described his reaction to AI’s encroachment on art, recalling software that automatically transforms any image into the style of Van Gogh. He said, “Seeing AI invade the human domain broke my stereotype.”

If human beings were replaced by AI in every area, what would we do? This question also arose in the context of education. P16, a middle school teacher, explained her difficulties with career counseling and education programs for students. She said, “The world will change. Most jobs today’s children will have in the future have not been created yet.” Because she could not anticipate which jobs would disappear and which would be created, she was skeptical about teaching with content and systems designed around present-day standards.

Singularity is Near

Some participants expressed concern about a situation in which humans cannot control the advancement of AI technology. This worry is related to the concept of the technological singularity, in which the invention of artificial superintelligence abruptly triggers runaway technological growth, resulting in unfathomable changes to human civilization. According to the singularity hypothesis, an autonomously upgradable intelligent agent would enter a ‘runaway reaction’ of self-improvement cycles, with each new and more intelligent generation appearing more and more rapidly, causing an intelligence explosion and resulting in a powerful superintelligence that would far surpass all human intelligence. After seeing AlphaGo build its own strategies, which went beyond human understanding, and easily beat the human champion, the participants thought that the singularity could soon be realized in every field and that humans would not be able to control the technology. P06 said, “It’s terrible. But the day will come. I can only hope the day is not today.” The participants unanimously insisted that society needs to reach a consensus about the technology and that laws and systems should be put in place to prevent potential problems.

AI is Abused

The participants also expressed concern that AI technology might be misused. The AlphaGo match demonstrated the power of AI to many people around the world, and participants worried that this overwhelming power could lead some people to monopolize the technology and exploit it for private interests. They said that if an individual or an enterprise dominates the technology, the few who have it might control the many who do not. P04 said, “I think that one wealthy person or a few rich people will dominate artificial intelligence.” P01 also noted, “Of course, artificial intelligence itself is dangerous. But I am more afraid of humans, as they can abuse the technology for selfish purposes.” Some participants argued that if the technology were monopolized, the inequality between those who have it and those who do not would become more severe. For example, P13 said, “I agree with the opinion that we need to control AI. But who will control it? If someone gets the power to control the technology, he will rule everything. Then we will need to control the man who controls AI.” Ultimately, people’s worry about the misuse of AI rests on human decisions rather than on the technology itself. This reveals another “us vs. them” view: those who have AI vs. those who do not.

DISCUSSION

Based on our findings, we discuss the current public awareness of AI and its implications for HCI as well as suggestions for future work.

Cognitive Dissonance

According to the theory of cognitive dissonance, when people face an unexpected situation that is inconsistent with their preconceptions, they may experience mental stress or discomfort. People’s fear of AI can also be understood through the lens of cognitive dissonance. Through the interviews, we identified two preconceptions and fixed ideas that participants held about AI: (a) AI could be a source of potential danger, and (b) AI agents should help humans. Although these two ideas seem contradictory, one seeing AI as a potential danger and the other seeing it as a tool, they are connected through the notion of control over the technology. The idea that AI could be dangerous to humans extends to the idea that it should be controlled so that it plays a beneficial and helpful role for us.

While watching the Google DeepMind Challenge Match, however, people might have faced situations that did not match these notions. The outcome of the match indicated that humans are no longer superior to AI and cannot control it, which was inconsistent with (b). People might have had difficulty coping with the possibility of a reversal in position between humans and AI. The participants reported negative feelings, such as helplessness, disagreeability, depression, a sense of human, and stress. By contrast, (a) was strengthened. Because the idea that AI could harm humans provoked negative emotions in itself, its strengthening reinforced, rather than relieved, the negative influence of the dissonance caused by the violation of (b).

Meanwhile, the negative emotional states attributed to this dissonance show that the fear of AI should not be viewed through the lens of traditional technophobia research, which has focused on users’ basic demographic information and everyday interactions with computers. Users’ experience with AI is not limited to actual interactions; it can also be shaped by prior media exposure and by their notions and beliefs about the technology. Therefore, to understand and reduce users’ technophobia toward AI, we need to incorporate these factors into theory and practice and explore ways to reduce the dissonance between users’ beliefs and actual AI-embedded interfaces.

Beyond Technophobia

Two of our most important findings relate to tendencies underlying people’s fear of AI: (1) anthropomorphizing and (2) alienating. Participants not only anthropomorphized AI as having equal status with humans but also alienated it, revealing hostility. While watching AlphaGo play, people regarded it as if it had human-like intelligence: they perceived its capacity as comparable to a human’s and interpreted its behavior as if it were a rational agent controlling its own choice of actions. At the same time, however, people alienated AI by regarding it as different and showed hostility toward it. They looked for the ways in which AI differed from humans, evaluated it against human characteristics, and dehumanized it when it seemed to transcend or differ from those characteristics.

As the participants stated in the interviews, this tendency to anthropomorphize and alienate was not common in their experience with computers. Because they regarded the computer as a tool for completing certain tasks, their problems with computers were not related to the computer itself but mainly to the anxiety arising from interacting with it. By contrast, people viewed AI as a being with something close to a persona. In this sense, the problem seems less a technological issue than something similar to communication and relationship problems among humans. Moreover, they looked for its differences and then alienated it. Accordingly, the fear of AI may not be a problem of technophobia but an issue closer to xenophobia, the fear of that which is perceived to be foreign or strange.

Accordingly, efforts to reduce users’ fear of AI should build on an understanding of communication among humans rather than simply investigating superficial problems with the computer interface. Previous studies showing that people carry the social behavior of human-human interaction over into human-computer interaction also support this viewpoint. In particular, when designing interfaces that use AI, taking into account the major factors common in human communication and establishing a relationship between AI and users could be crucial for improving the user experience. The relationship and the manner of communication between users and human-like agents (and algorithms) should be defined clearly and appropriately for each interface’s function and purpose.

Toward a New Chapter in Human-Computer Interaction

The Google DeepMind Challenge Match was not just an interesting event in which AI defeated the human Go champion. It was a major milestone marking a new chapter in the history of HCI. We found it to be an opportunity to assess and understand people’s view of AI technology and to discuss considerations for HCI as we gradually integrate AI technology into user interfaces.

AI is expected to be used in various devices and services, and users will have more opportunities to interact with interfaces that use the technology. As the term “algorithmic turn” suggests, algorithms will play an increasingly important role in user interfaces and the experiences surrounding them. Moreover, because algorithms can be cross-linked across various interfaces, they are expected to affect users’ holistic experience, including their behavior and lifestyle. This closely resembles the “environmental atmospheric” media that Hansen describes as characteristic of twenty-first-century media.

In this respect, we propose to the HCI community the concept of “algorithmic experience” as a new stream of research on user experience in AI-embedded environments. It encompasses diverse aspects of longitudinal user experience with algorithms that are environmentally embedded in various interfaces, and it emphasizes long-term relationships with algorithms rather than the simple usability and utility of interfaces. Regarding interfaces, we need to extend their borders to the various devices and services to which algorithms could be applied. Regarding users, user experience should not be restricted to simple interactions with machines or computers but should be extended to communication and relationship building with an agent. We believe this new concept can help the HCI community accept and integrate AI into UI and UX design. It calls for a collaborative research effort from the HCI community to study users and help them adapt to a future in which they interact with algorithms as well as interfaces.

Coping with the Potential Danger

Although addressing ways of coping with the potential danger of AI is beyond the scope of this study, we cannot neglect the gravity of the issue. As shown in the interviews, people revealed their concerns about AI threatening their lives and existence. This shows that the AI problem is not restricted to individuals and needs to be addressed as a social problem. Participants insisted that there should be institutional policies regarding AI, encompassing law and common ethics, and argued that sufficient discussion should precede such policymaking. Recently, it was reported that the world’s largest tech companies, all of which are closely linked to HCI, agreed to develop a standard of ethics for the creation of AI. This development underscores the importance of understanding how people perceive and view AI. We believe discussions on building a desirable relationship between humans and AI will play a vital role in devising such standards.

Limitations

This study has several limitations. First, we used the term AI in a broad sense, although it could be interpreted in many ways depending on its capabilities and functions. Second, because our participants were all metropolitan Koreans who actively volunteered to participate, the results may not be generalizable. Third, we did not relate this research to previous related events, such as Deep Blue’s chess match and IBM Watson’s Jeopardy! win. Further research should address these limitations. As a first step, we plan to conduct a quantitative study of the worldwide reaction to AI by crawling and analyzing data from social network sites.

CONCLUSION

This study has attempted to understand people’s fear of AI through a case study of the Google DeepMind Challenge Match. Through a qualitative study, we found that people showed apprehension toward AI and cheered for their fellow human champion during the match. In addition, people anthropomorphized AI and alienated it as an “other” that could harm human beings, often forming a confrontational relationship with it. They also expressed concerns about the prevalence of AI in the future. This study makes three contributions to the HCI community. First, we have investigated people’s fear of AI from various perspectives, which can inform work in various areas. Second, we have identified the confrontational “us vs. them” view between humans and AI, which is distinct from the existing view of computers. Third, we have stressed the importance of AI in the HCI field and proposed the concepts of an expanded user interface and algorithmic experience. We hope that this study will draw the attention of HCI researchers to emerging algorithmic experiences. We also hope that the results of the study will contribute to designing new user interfaces and interactions that involve AI and related technologies.

ACKNOWLEDGEMENTS

This work was supported by an Institute for Information & communications Technology Promotion (IITP) grant funded by the Korea government (MSIP) (No.R7122-16-0077).

- LM Anthony, MC Clarke, and SJ Anderson. 2000. Technophobia and personality subtypes in a sample of South African university students. Computers in Human Behavior 16, 1 (2000), 31–44. DOI: http://dx.doi.org/10.1016/S0747-5632(99)00050-3

- British Science Association. 2015. One in three believe that the rise of artificial intelligence is a threat to humanity. (2015). Retrieved September 21, 2016 from http://www.britishscienceassociation.org/news/rise-of-artificial-intelligence-is-a-threat-to-humanity.

- Casey C Bennett and Kris Hauser. 2013. Artificial intelligence framework for simulating clinical decision-making: A Markov decision process approach. Artificial intelligence in medicine 57, 1 (2013), 9–19. DOI: http://dx.doi.org/10.1016/j.artmed.2012.12.003

- Mark Brosnan and Wanbil Lee. 1998. A cross-cultural comparison of gender differences in computer attitudes and anxieties: The United Kingdom and Hong Kong. Computers in Human Behavior 14, 4 (1998), 559–577. DOI: http://dx.doi.org/10.1016/S0747-5632(98)00024-7

- Mark J Brosnan. 2002. Technophobia: The psychological impact of information technology. Routledge.

- Rory Cellan-Jones. 2014. Stephen Hawking warns artificial intelligence could end mankind. BBC News 2 (2014). Retrieved September 21, 2016 from http://www.bbc.com/news/technology-30290540.

- Tien-Chen Chien. 2008. Factors Influencing Computer Anxiety and Its Impact on E-Learning Effectiveness: A Review of Literature. Online Submission (2008).

- DeepMind. 2016. AlphaGo. (2016). Retrieved September 21, 2016 from https://deepmind.com/research/alphago/.

- @demishassabis. 2016. #AlphaGo WINS!!!! We landed it on the moon. So proud of the team!! Respect to the amazing Lee Sedol too. Tweet. (8 March 2016). Retrieved September 21, 2016 from https://twitter.com/demishassabis/status/707474683906674688.

- Daniel C Dennett. 2008. Kinds of minds: Toward an understanding of consciousness. Basic Books.

- Steven E Dilsizian and Eliot L Siegel. 2014. Artificial intelligence in medicine and cardiac imaging: harnessing big data and advanced computing to provide personalized medical diagnosis and treatment. Current cardiology reports 16, 1 (2014), 1–8. DOI: http://dx.doi.org/10.1007/s11886-013-0441-8

- OV Doronina. 1995. Fear of computers. Russian Education & Society 37, 2 (1995), 10–28.

- Brian R Duffy. 2003. Anthropomorphism and the social robot. Robotics and autonomous systems 42, 3 (2003), 177–190. DOI: http://dx.doi.org/10.1016/S0921-8890(02)00374-3

- Jennifer L Dyck and Janan Al-Awar Smither. 1994. Age differences in computer anxiety: The role of computer experience, gender and education. Journal of educational computing research 10, 3 (1994), 239–248. DOI: http://dx.doi.org/10.2190/E79U-VCRC-EL4E-HRYV

- Leon Festinger. 1962. A theory of cognitive dissonance. Vol. 2. Stanford university press.

- Sylvain Gelly and David Silver. 2011. Monte-Carlo tree search and rapid action value estimation in computer Go. Artificial Intelligence 175, 11 (2011), 1856–1875. DOI: http://dx.doi.org/10.1016/j.artint.2011.03.007

- David Gilbert, Liz Lee-Kelley, and Maya Barton. 2003. Technophobia, gender influences and consumer decision-making for technology-related products. European Journal of Innovation Management 6, 4 (2003), 253–263. DOI: http://dx.doi.org/10.1108/14601060310500968

- Barney G Glaser and Anselm L Strauss. 2009. The discovery of grounded theory: Strategies for qualitative research. Transaction publishers.

- Erico Guizzo. 2011. How google’s self-driving car works. IEEE Spectrum Online, October 18 (2011).

- Mark BN Hansen. 2015. Feed-forward: On the Future of Twenty-first-century Media. University of Chicago Press.

- Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2015. Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In Proceedings of the IEEE International Conference on Computer Vision. 1026–1034.

- RD Henderson, FP Deane, and MJ Ward. 1995. Occupational differences in computer-related anxiety: Implications for the implementation of a computerized patient management information system. Behaviour & information technology 14, 1 (1995), 23–31. DOI: http://dx.doi.org/10.1080/01449299508914622

- Sara Kiesler, Aaron Powers, Susan R Fussell, and Cristen Torrey. 2008. Anthropomorphic interactions with a robot and robot-like agent. Social Cognition 26, 2 (2008), 169.

- Sara Kiesler, Lee Sproull, and Keith Waters. 1996. A prisoner’s dilemma experiment on cooperation with people and human-like computers. Journal of personality and social psychology 70, 1 (1996), 47. DOI: http://dx.doi.org/10.1037/0022-3514.70.1.47

- René F Kizilcec. 2016. How Much Information?: Effects of Transparency on Trust in an Algorithmic Interface. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems. ACM, 2390–2395. DOI: http://dx.doi.org/10.1145/2858036.2858402

- Todd Kulesza, Simone Stumpf, Margaret Burnett, and Irwin Kwan. 2012. Tell me more?: the effects of mental model soundness on personalizing an intelligent agent. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. ACM, 1–10. DOI: http://dx.doi.org/10.1145/2207676.2207678

- Songpol Kulviwat, Gordon C Bruner II, Anand Kumar, Suzanne A Nasco, and Terry Clark. 2007. Toward a unified theory of consumer acceptance technology. Psychology & Marketing 24, 12 (2007), 1059–1084. DOI: http://dx.doi.org/10.1002/mar.20196

- Ray Kurzweil. 2005. The singularity is near: When humans transcend biology. Penguin.

- Brenden M Lake, Ruslan Salakhutdinov, and Joshua B Tenenbaum. 2015. Human-level concept learning through probabilistic program induction. Science 350, 6266 (2015), 1332–1338. DOI: http://dx.doi.org/10.1126/science.aab3050

- Min Kyung Lee, Daniel Kusbit, Evan Metsky, and Laura Dabbish. 2015. Working with machines: The impact of algorithmic and data-driven management on human workers. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems. ACM, 1603–1612. DOI: http://dx.doi.org/10.1145/2702123.2702548

- Henry Lieberman. 2009. User interface goals, AI opportunities. AI Magazine 30, 4 (2009), 16. DOI: http://dx.doi.org/10.1609/aimag.v30i4.2266

- Manja Lohse, Reinier Rothuis, Jorge Gallego-Pérez, Daphne E Karreman, and Vanessa Evers. 2014. Robot gestures make difficult tasks easier: the impact of gestures on perceived workload and task performance. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. ACM, 1459–1466. DOI: http://dx.doi.org/10.1145/2556288.2557274

- George A Marcoulides and Xiang-Bo Wang. 1990. A cross-cultural comparison of computer anxiety in college students. Journal of Educational Computing Research 6, 3 (1990), 251–263. DOI: http://dx.doi.org/10.2190/CVYH-M8F9-9WDV-38JG

- John Markoff. 2016. How Tech Giants Are Devising Real Ethics for Artificial Intelligence. The New York Times (2016). Retrieved September 21, 2016 from http://www.nytimes.com/2016/09/02/technology/artificial-intelligence-ethics.html?_r=0.

- Merriam-Webster. 2016. Fear. (2016). Retrieved September 21, 2016 from http://www.merriam-webster.com/dictionary/fear.

- Matthew L Meuter, Amy L Ostrom, Mary Jo Bitner, and Robert Roundtree. 2003. The influence of technology anxiety on consumer use and experiences with self-service technologies. Journal of Business Research 56, 11 (2003), 899–906. DOI: http://dx.doi.org/10.1016/S0148-2963(01)00276-4

- Ian Millington and John Funge. 2016. Artificial intelligence for games. CRC Press.

- Volodymyr Mnih, Koray Kavukcuoglu, David Silver, Andrei A Rusu, Joel Veness, Marc G Bellemare, Alex Graves, Martin Riedmiller, Andreas K Fidjeland, Georg Ostrovski, and others. 2015. Human-level control through deep reinforcement learning. Nature 518, 7540 (2015), 529–533. DOI: http://dx.doi.org/10.1038/nature14236

- Michael Montemerlo, Jan Becker, Suhrid Bhat, Hendrik Dahlkamp, Dmitri Dolgov, Scott Ettinger, Dirk Haehnel, Tim Hilden, Gabe Hoffmann, Burkhard Huhnke, and others. 2008. Junior: The stanford entry in the urban challenge. Journal of field Robotics 25, 9 (2008), 569–597. DOI: http://dx.doi.org/10.1002/rob.20258

- Clifford Nass, Jonathan Steuer, Ellen Tauber, and Heidi Reeder. 1993. Anthropomorphism, agency, and ethopoeia: computers as social actors. In INTERACT’93 and CHI’93 conference companion on Human factors in computing systems. ACM, 111–112. DOI: http://dx.doi.org/10.1145/259964.260137

- Clifford Nass, Jonathan Steuer, and Ellen R Tauber. 1994. Computers are social actors. In Proceedings of the SIGCHI conference on Human factors in computing systems. ACM, 72–78. DOI: http://dx.doi.org/10.1145/191666.191703

- Adrian S North and Jan M Noyes. 2002. Gender influences on children’s computer attitudes and cognitions. Computers in Human Behavior 18, 2 (2002), 135–150. DOI: http://dx.doi.org/10.1016/S0747-5632(01)00043-7

- Byron Reeves and Clifford Nass. 1996. How people treat computers, television, and new media like real people and places. CSLI Publications and Cambridge university press Cambridge, UK.

- Larry D Rosen and Phyllisann Maguire. 1990. Myths and realities of computerphobia: A meta-analysis. Anxiety Research 3, 3 (1990), 175–191. DOI: http://dx.doi.org/10.1080/08917779008248751

- Stuart Jonathan Russell, Peter Norvig, John F Canny, Jitendra M Malik, and Douglas D Edwards. 2003. Artificial intelligence: a modern approach. Vol. 2. Prentice hall Upper Saddle River.

- Allison Sauppé and Bilge Mutlu. 2014. Design patterns for exploring and prototyping human-robot interactions. In Proceedings of the 32nd annual ACM conference on Human factors in computing systems. ACM, 1439–1448. DOI: http://dx.doi.org/10.1145/2556288.2557057

- David Silver, Aja Huang, Chris J Maddison, Arthur Guez, Laurent Sifre, George Van Den Driessche, Julian Schrittwieser, Ioannis Antonoglou, Veda Panneershelvam, Marc Lanctot, and others. 2016. Mastering the game of Go with deep neural networks and tree search. Nature 529, 7587 (2016), 484–489. DOI: http://dx.doi.org/10.1038/nature16961

- Rashmi Sinha and Kirsten Swearingen. 2002. The role of transparency in recommender systems. In CHI’02 extended abstracts on Human factors in computing systems. ACM, 830–831. DOI: http://dx.doi.org/10.1145/506443.506619

- William Uricchio. 2011. The algorithmic turn: Photosynth, augmented reality and the changing implications of the image. Visual Studies 26, 1 (2011), 25–35. DOI: http://dx.doi.org/10.1080/1472586X.2011.548486

- Eloisa Vargiu and Mirko Urru. 2012. Exploiting web scraping in a collaborative filtering-based approach to web advertising. Artificial Intelligence Research 2, 1 (2012), p44. DOI: http://dx.doi.org/10.5430/air.v2n1p44

- Adam Waytz, Nicholas Epley, and John T Cacioppo. 2010. Social Cognition Unbound: Insights Into Anthropomorphism and Dehumanization. Current Directions in Psychological Science 19, 1 (2010), 58–62. DOI: http://dx.doi.org/10.1177/0963721409359302

- Michelle M Weil and Larry D Rosen. 1995. The psychological impact of technology from a global perspective: A study of technological sophistication and technophobia in university students from twenty-three countries. Computers in Human Behavior 11, 1 (1995), 95–133. DOI: http://dx.doi.org/10.1016/0747-5632(94)00026-E

- Terry Winograd. 2006. Shifting viewpoints: Artificial intelligence and human–computer interaction. Artificial Intelligence 170, 18 (2006), 1256–1258. DOI: http://dx.doi.org/10.1016/j.artint.2006.10.011

- Optimal Workshop. 2016. Reframer. (2016). Retrieved September 21, 2016 from https://www.optimalworkshop.com/reframer.